Guide on Data Streaming in Big Data

What is Streaming in Big Data?

Streaming is a process in which big data is instantly processed so as to extract real-time insights from that. The processing is done while the data is in motion.

It is a speed-focused approach wherein a stream of data is processed. Processing streams can be done by processing “time windows” of data in memory across the servers.

This data demands to be processed sequentially on any record-by-record basis or sliding time windows basis and is used for a type of analytics like collecting, filtering, and then sampling.

Streaming data is an analytic computing platform which is focused mainly on speed. This is because applications require a continuous stream of unstructured data to be processed.

Thus, data is continuously analyzed in memory before it is stored on a disk. Processing streams of data work by processing “time windows” of data in memory across a cluster of servers.

What are the benefits?

Streaming data processing is profitable in utmost scenarios where dynamic data is produced on a continual basis. It refers to the maximum of the industry segments and big data use cases.

Companies usually start with simplistic applications of collecting system logs and rotating min-max computations.

These applications are more advanced for real-time processing. Initially, applications may process data streams to generate simple reports and perform simple actions in reply, such as emitting alarms when key measures exceed specific thresholds.

Eventually, these applications make more complicated forms of data analysis, like applying machine learning algorithms, and extract insights from the data.

Some key principles applicable while streaming:

• It is necessary to determine a buying opportunity at the point of engagement, either by social media or by messaging.

• Getting information about the movement around a reliable position.

• To be able to react to an event that needs an immediate acknowledgment, such as a service interruption or a change in a patient’s medical status.

• Real-time consideration of values that depend on variables such as usage and available sources.

Some real-time data streaming tools and technologies which are available:

Flink:

Flink is a streaming data flow engine which aims to offer facilities for distributed computation across streams of data.

Treating batch processes as a specific case of data streaming, Flink is effective both as a batch and real-time processing framework.

• Flink is integrated with many other open-source data processing ecosystems.

• As compared to others, Flink is more stream-oriented.

• Highly Flexible Streaming Windows for Continuous Streaming Model.

Storm:

The storm is a distributed real-time computation system. Its applications are designed as directed acyclic graphs. Storm can be utilized with a programming language. It is known for processing highly scalable and provides processing job guarantees.

• The storm is discovered for processing very large bytes.

• It is scalable which works on equal considerations that run across a bunch of computers.

• The storm is reliable. It ensures that each segment of data will be processed at least once. Messages are only replayed when there are collisions.

Kafka:

Kafka is a distributed published subscribe messaging system which integrates data streams. It automatically balances consumers at the time of failure which is very much reliable in comparison to messaging services.

• It is Highly Reliable

• This is capable to scale quickly and smoothly without obtaining any downtime.

• This gives High Performance for both publishing and subscribing.

Difficulties in working with Streaming Data:

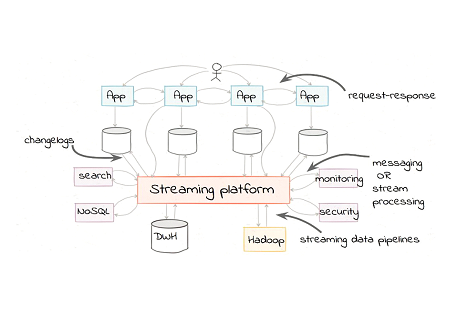

Streaming data processing requires two layers: a storage layer and a processing layer.

The storage layer needs support to ordering and strong density to read and write large streams of data with fast speed, inexpensive, and repayable.

The processing layer which is capable to use data from storage layer and guides to delete data which is unnecessary by running estimates on that data to the storage layer.

We also ought to design for scalability and data durability in both the storage and processing layers. As a result, many platforms have developed the infrastructure needed to build streaming data.

If you appreciate and want to know more about Big Data and Hadoop Distributed File System, click here and read up

Nitesh

Author

Bonjour. A curious dreamer enchanted by various languages, I write towards making technology seem fun here at Asha24.