Introduction To MapReduce in Big Data

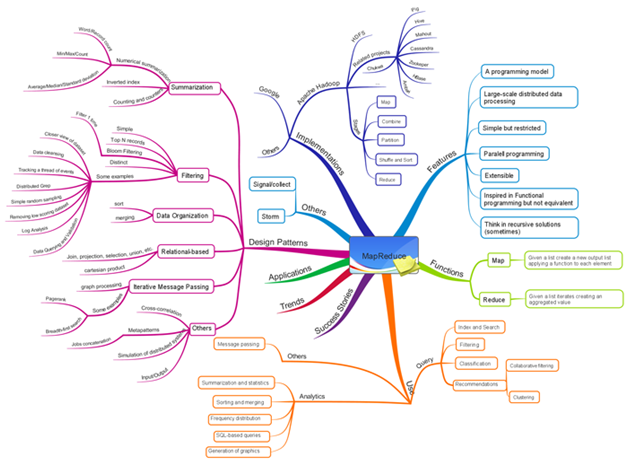

MapReduce is a programming model for distributed computing. Hadoop's implementation of it is based on Java.

The MapReduce algorithm has two main tasks:

1) Map

2) Reduce.

The Map task takes a set of data and converts it into a different set of data, in which individual elements are broken down into tuples (key/value pairs).

The second task is Reduce. It takes the output of the map as its input and combines those data tuples into a smaller set of tuples.

MapReduce's main advantage is that it makes it easy to scale data processing across multiple computing nodes.
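As a minimal, single-machine sketch of the two tasks, the classic word count can be written in plain Python. The function names `map_words` and `reduce_counts` and the sample lines are hypothetical, chosen only for illustration. A real job would use a framework such as Hadoop rather than in-memory lists.

```python
from collections import defaultdict

def map_words(line):
    """Map: break one line of text into (word, 1) tuples."""
    return [(word.lower(), 1) for word in line.split()]

def reduce_counts(pairs):
    """Reduce: combine tuples that share a key into one (word, total) entry."""
    totals = defaultdict(int)
    for word, count in pairs:
        totals[word] += count
    return dict(totals)

# Hypothetical input: two short lines of text.
lines = ["big data is big", "data is everywhere"]

# The output of the map step is the input of the reduce step.
mapped = [pair for line in lines for pair in map_words(line)]
word_counts = reduce_counts(mapped)

print(len(mapped), "tuples reduced to", len(word_counts))
print(word_counts)
```

Here seven mapped tuples shrink to four, which is the "smaller set of tuples" the Reduce task produces.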

A MapReduce job moves through the following phases:

• Input Phase − Here we have a Record Reader that translates each record in an input file and sends the parsed data to the mapper in the form of key-value pairs.

• Map − Map is a user-defined function that takes a series of key-value pairs and processes each one of them to generate zero or more key-value pairs.

• Intermediate Keys − The key-value pairs generated by the mapper are known as intermediate keys.

• Combiner − A combiner is a type of local Reducer that groups similar data from the map phase into identifiable sets. It takes the intermediate keys from the mapper as input and applies user-defined code to aggregate the values within the small scope of a single mapper. It is not a part of the main MapReduce algorithm; it is optional.

• Shuffle and Sort − The Reducer task starts with the Shuffle and Sort step. It downloads the grouped key-value pairs onto the local machine where the Reducer is running. The individual key-value pairs are sorted by key into a larger data list. The data list groups equal keys together so that their values can be iterated easily in the Reducer task.

• Reducer − The Reducer takes the grouped key-value data as input and runs a Reducer function on each group. Here the data can be aggregated, filtered, and combined in a number of ways, and it may require a wide range of processing. Once the execution is finished, it passes zero or more key-value pairs to the final step.

• Output Phase − In the output phase, we have an output formatter that takes the final key-value pairs from the Reducer function and writes them to a file using a record writer.
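The phases above can be sketched end to end in plain Python. This is an in-memory illustration under stated assumptions, not how Hadoop is implemented: every function name here is hypothetical, each string in `splits` stands in for the input of one mapper, and the output phase only formats text instead of writing a file.

```python
from collections import defaultdict
from itertools import groupby

def record_reader(text):
    """Input phase: turn raw text into (line_offset, line) key-value pairs."""
    offset = 0
    for line in text.splitlines():
        yield offset, line
        offset += len(line) + 1

def mapper(_offset, line):
    """Map: emit zero or more intermediate (word, 1) pairs per record."""
    for word in line.split():
        yield word.lower(), 1

def combiner(pairs):
    """Combiner (optional): pre-aggregate the output of a single mapper."""
    local = defaultdict(int)
    for key, value in pairs:
        local[key] += value
    return list(local.items())

def shuffle_and_sort(all_pairs):
    """Shuffle and sort: sort by key, then group equal keys together."""
    ordered = sorted(all_pairs, key=lambda kv: kv[0])
    for key, group in groupby(ordered, key=lambda kv: kv[0]):
        yield key, [value for _, value in group]

def reducer(key, values):
    """Reduce: aggregate all values that share a key."""
    yield key, sum(values)

def run_job(splits):
    """Run the phases in order; each split stands in for one mapper's input."""
    intermediate = []
    for split in splits:
        mapped = [kv for off, line in record_reader(split)
                  for kv in mapper(off, line)]
        intermediate.extend(combiner(mapped))
    results = []
    for key, values in shuffle_and_sort(intermediate):
        results.extend(reducer(key, values))
    return results

def format_output(results):
    """Output phase: format the final pairs as tab-separated lines."""
    return "\n".join(f"{key}\t{value}" for key, value in results)

# Hypothetical input, already divided into two splits.
splits = ["deer bear river\ncar car river", "deer car bear"]
results = run_job(splits)
print(format_output(results))
```

Because summing is associative, running the combiner before the shuffle does not change the final counts; it only reduces how many pairs have to be moved to the Reducer.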

Algorithm:

The MapReduce algorithm contains two important tasks, namely Map and Reduce.

• The map task is done by the Mapper class.

• The reduce task is done by the Reducer class.

The Mapper class takes the input, tokenizes it, maps it, and sorts it.

The output of the Mapper class is used as input to the Reducer class, which in turn searches for matching pairs and reduces them.

MapReduce implements several mathematical algorithms to divide a task into small parts and assign them to multiple systems.

In technical terms, the MapReduce algorithm helps in sending the Map & Reduce tasks to appropriate servers in a cluster.
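The idea of dividing a task into small parts and handing them to several workers can be sketched as follows. This is only an analogy on one machine: threads stand in for servers in a cluster, and the names `split_into_chunks`, `map_task`, and `reduce_task` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(items, num_chunks):
    """Divide the work into roughly equal contiguous parts."""
    size = max(1, -(-len(items) // num_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]

def map_task(chunk):
    """Map task: each worker computes a partial sum of squares."""
    return sum(x * x for x in chunk)

def reduce_task(partials):
    """Reduce task: combine the partial results into one answer."""
    return sum(partials)

numbers = list(range(1, 11))
chunks = split_into_chunks(numbers, 3)

# Threads stand in for cluster nodes; pool.map keeps the chunk order.
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(map_task, chunks))

total = reduce_task(partials)
print(chunks)
print(partials, total)
```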

The mathematical algorithms that MapReduce implements may include the following −

• Searching.

• Sorting.

• Indexing.

• TF-IDF.

Sorting:

Sorting is one of the basic MapReduce algorithms used to process and analyze data.

Sorting methods are implemented in the mapper class itself.

In the Shuffle and Sort phase, after the values are tokenized in the mapper class, the Context class collects the matching valued keys as a collection.

To collect similar key-value pairs (intermediate keys), the Mapper class uses the RawComparator class to sort the key-value pairs.

The set of intermediate key-value pairs for a given Reducer is automatically sorted by Hadoop to form key-values (K2, {V2, V2, …}) before they are presented to the Reducer.
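To make the (K2, {V2, V2, …}) shape concrete, here is a small sketch of sorting intermediate pairs with a pluggable comparator and then grouping them by key. The comparator argument plays a role loosely similar to a key comparator in Hadoop, but the functions below are hypothetical Python stand-ins, not the Hadoop API.

```python
from functools import cmp_to_key
from itertools import groupby

def natural_order(a, b):
    """Comparator: negative if a < b, zero if equal, positive if a > b."""
    return (a > b) - (a < b)

def sort_and_group(pairs, comparator=natural_order):
    """Sort (K2, V2) pairs by key, then collect values as (K2, [V2, V2, ...])."""
    ordered = sorted(
        pairs, key=cmp_to_key(lambda x, y: comparator(x[0], y[0]))
    )
    return [
        (key, [value for _, value in group])
        for key, group in groupby(ordered, key=lambda kv: kv[0])
    ]

# Hypothetical intermediate pairs, in the order the mappers emitted them.
intermediate = [("b", 2), ("a", 1), ("b", 3), ("a", 4), ("c", 5)]

grouped = sort_and_group(intermediate)
descending = sort_and_group(intermediate, lambda a, b: natural_order(b, a))

print(grouped)     # [('a', [1, 4]), ('b', [2, 3]), ('c', [5])]
print(descending)  # [('c', [5]), ('b', [2, 3]), ('a', [1, 4])]
```

Swapping the comparator changes the order in which keys reach the Reducer without changing which values are grouped under each key.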

Searching:

Searching plays an important role in the MapReduce algorithm. It helps in the combiner phase and in the Reducer phase.
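One way to picture searching in these two phases is a search for the record with the largest value: each combiner finds the best candidate in its own split, and the Reducer picks the overall winner among those candidates. The sketch below assumes this particular search; the records and the function names `local_max` and `global_max` are hypothetical.

```python
def local_max(records):
    """Combiner-side search: the highest-paid record within one split."""
    return max(records, key=lambda rec: rec[1])

def global_max(candidates):
    """Reducer-side search: the highest-paid record among the local winners."""
    return max(candidates, key=lambda rec: rec[1])

# Hypothetical (name, salary) records, already split across two mappers.
splits = [
    [("asha", 26000), ("ravi", 25000), ("meena", 15000)],
    [("john", 45000), ("li", 30000)],
]

candidates = [local_max(split) for split in splits]
winner = global_max(candidates)

print(candidates)  # [('asha', 26000), ('john', 45000)]
print(winner)      # ('john', 45000)
```

Only one candidate per split travels to the Reducer, so the search touches far less data in the final step than a scan over all records would.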

Indexing:

Indexing is normally used to point to a particular piece of data and its address. It performs batch indexing on the input files for a particular Mapper.

The indexing technique commonly used in MapReduce is known as an inverted index. Search engines like Google and Bing use the inverted indexing technique.
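An inverted index maps each term to the documents that contain it. The following is a minimal in-memory sketch, assuming whitespace tokenization and hypothetical document IDs (`T0`, `T1`, `T2`); the function names are likewise illustrative.

```python
from collections import defaultdict

def map_terms(doc_id, text):
    """Map: emit one (term, doc_id) pair per distinct term in a document."""
    for term in set(text.lower().split()):
        yield term, doc_id

def build_inverted_index(documents):
    """Reduce: collect, for every term, the sorted list of documents using it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term, posting in map_terms(doc_id, text):
            index[term].add(posting)
    return {term: sorted(doc_ids) for term, doc_ids in index.items()}

# Hypothetical documents keyed by ID.
documents = {
    "T0": "it is what it is",
    "T1": "what is it",
    "T2": "it is a banana",
}
index = build_inverted_index(documents)

print(index["what"])    # ['T0', 'T1']
print(index["banana"])  # ['T2']
```

Looking up a term is then a single dictionary access instead of a scan over every document.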

TF-IDF:

TF-IDF is a text processing algorithm whose name is short for Term Frequency − Inverse Document Frequency.

It is one of the common web analysis algorithms. Here, the term 'frequency' refers to the number of times a term appears in a document.

Term Frequency (TF):

It measures how frequently a particular term occurs in a document.

It is calculated as the number of times a word appears in a document divided by the total number of words in that document.

Inverse Document Frequency (IDF):

It measures the importance of a term.

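It is calculated as the logarithm of the number of documents in the text database divided by the number of documents in which a specific term appears.

While computing TF, all terms are considered equally important, so common words such as "is" and "a" score highly. IDF compensates by scaling down frequent terms and scaling up rare ones.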
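The two measures can be worked through on a tiny corpus. In this sketch IDF is taken as the base-10 logarithm of the document ratio, which is a common convention and an assumption here; other bases and smoothing variants exist. The corpus and function names are hypothetical.

```python
import math

def term_frequency(term, words):
    """TF: occurrences of the term divided by the total words in the document."""
    return words.count(term) / len(words)

def inverse_document_frequency(term, corpus):
    """IDF: log10 of (number of documents / documents containing the term)."""
    containing = sum(1 for words in corpus if term in words)
    if containing == 0:
        raise ValueError(f"term {term!r} does not appear in the corpus")
    return math.log10(len(corpus) / containing)

def tf_idf(term, words, corpus):
    """TF-IDF: the product of the two measures for one term in one document."""
    return term_frequency(term, words) * inverse_document_frequency(term, corpus)

# Hypothetical corpus of four tokenized documents.
corpus = [
    ["big", "data", "needs", "big", "clusters"],
    ["data", "is", "everywhere"],
    ["clusters", "scale", "out"],
    ["hadoop", "is", "open", "source"],
]

doc = corpus[0]
print(term_frequency("big", doc))                         # 0.4
print(round(inverse_document_frequency("big", corpus), 4))
print(round(tf_idf("big", doc, corpus), 4))
print(round(tf_idf("data", doc, corpus), 4))
```

"big" appears twice in a five-word document and in only one of four documents, so it scores higher than "data", which appears once in that document and in two of the four.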

These are the main MapReduce algorithms.

Naveen E

Author
